In the heart of Oklahoma, where determination and ingenuity have shaped communities for generations, a new chapter of innovation is unfolding. Today in Oklahoma City, I stood with our team and cut the ribbon on Cerebras’ newest AI datacenter—a facility built not just to power artificial intelligence, but to shape its future.

Growing up, I often thought about my father, who was raised on a remote mining site in Australia. Life on a mine was hard—the work grueling, the distances vast—but it taught resilience, teamwork, and big dreams. His stories convinced me that transformative work doesn’t only happen in big cities. It happens wherever people are willing to roll up their sleeves and build something extraordinary together. Standing here in Oklahoma City, I feel that same spirit alive in this community.

Built for Breakthroughs

In 2023 we built our first in-house AI supercomputer, Andromeda, then the largest of its kind. Two years later, our new Oklahoma City facility delivers more than 44 exaflops of AI compute and supports the largest models yet invented.

On AI-native workloads, Cerebras is 20–40× faster than top GPUs, serving models like Meta Llama, OpenAI GPT-OSS-120B, Qwen, and DeepSeek at 2,000–3,000 tokens per second. That speed is unlocking new ground in agentic development, real-time reasoning, and instant code generation.
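To make those throughput figures concrete, here is a rough back-of-the-envelope sketch of how long it would take to stream a response at the quoted rates. The 1,000-token response length and the function name `generation_seconds` are illustrative assumptions, not measured values or part of any Cerebras API, and the calculation ignores prompt processing and network latency.

```python
def generation_seconds(num_tokens: int, tokens_per_second: float) -> float:
    """Time to stream num_tokens at a steady decode rate.

    Simplifying assumption: constant throughput, with no prompt
    processing time and no network latency.
    """
    if tokens_per_second <= 0:
        raise ValueError("tokens_per_second must be positive")
    return num_tokens / tokens_per_second


# Hypothetical response length, chosen only for illustration.
RESPONSE_TOKENS = 1_000

for rate in (2_000, 3_000):  # throughput range quoted above
    seconds = generation_seconds(RESPONSE_TOKENS, rate)
    print(f"{rate} tokens/s -> {seconds:.2f} s")
```

Under these assumptions, a 1,000-token answer would stream in roughly a third to half of a second.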

In 2025:

- The fastest OpenAI model runs on Cerebras.

- The fastest Meta Llama model runs on Cerebras.

- The fastest Code-Gen model runs on Cerebras.

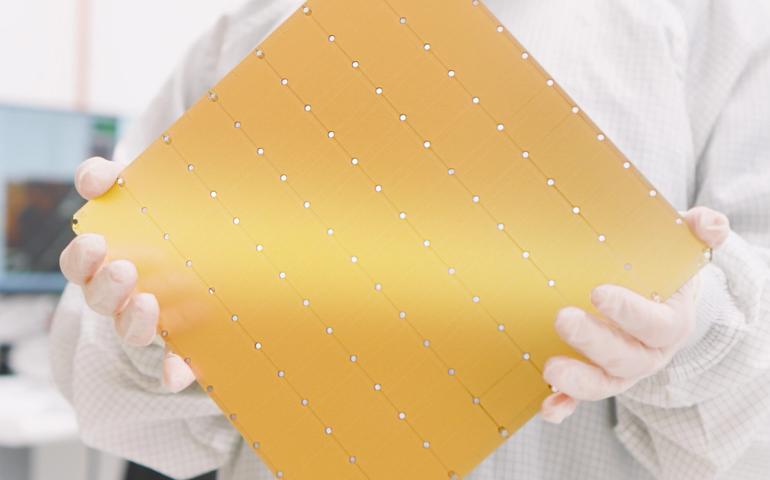

These extraordinary performance results are a consequence of Cerebras’ fundamental innovation in chip design. Our Wafer Scale Engine 3 (WSE-3) is the largest processor ever built. It is 56 times larger than the largest GPU. With 4 trillion transistors and 900,000 AI cores, the WSE chews through even the most demanding AI workloads at speeds unmatched in the industry. Put simply, we are the fastest in the world, bar none. And in the new AI economy, speed is the fuel for growth.

Wafer-Scale Datacenters: More Tokens, Less Power

This moment in Oklahoma City builds on nearly a decade of relentless innovation. As we approach our 10-year anniversary, we’ve transformed AI infrastructure across North America—from early deployments in California to high-capacity builds in Dallas and Minneapolis, and additional sites across the continent—raising the bar for performance, reliability, and sustainability.

Our wafer-scale architecture avoids unnecessary data movement, radically reducing, and in some cases completely eliminating, cross-device communication and orchestration. The result: simpler scaling, higher throughput, and faster time-to-solution for open-source communities, foundation model developers, and sovereign AI initiatives. It’s a fundamentally different approach, and the engine of our advantage.

By avoiding unnecessary data movement, we also improve our power efficiency. For agentic, real-time reasoning, and instant code-generation workloads, our systems use about one-third the power of the fastest GPUs.

Efficiency was foremost in our thinking at the chip as well as the system level. We designed our hardware for direct-to-chip water cooling from day one. Because water moves heat far more effectively than air, our loops cut fan and chiller work, let us run warmer rooms and cooler chips, and meaningfully reduce facility power for the same AI compute. GPU stacks are only now catching up with water cooling in their latest generation.

Oklahoma at the Center of AI: Built in Partnership

Great projects still require trusted partners. Scale Datacenter prepared the site and infrastructure that helped us move quickly. As Scale CEO Trevor Francis put it:

“We are excited to partner with Cerebras to bring world-class AI infrastructure to Oklahoma City. Our collaboration with Cerebras underscores our commitment to empowering innovation in AI, and we look forward to supporting the next generation of AI-driven applications.”

From the start, we aimed to build a datacenter that respects its neighbors and the environment. Our closed-loop cooling minimizes water use, relying on outside air except on the hottest days. Every kilowatt-hour consumed here is matched with renewable energy added back to the grid. And we’ve worked closely with local utilities to maintain price stability and grid reliability so our growth benefits the whole community.

This datacenter has already created hundreds of good-paying jobs and will anchor long-term roles in engineering, IT, and operations. We’re laying the groundwork with local colleges and technical programs to build future pathways into high-demand datacenter careers, and we’re preparing to collaborate with businesses across the region so they can harness the capacity this site will provide.

Looking Forward

As I looked out at the crowd today, I thought of my father on that mine in Australia—and of the belief that no place is too remote, no idea too ambitious, to shape the future. Oklahoma City now stands at the center of something extraordinary. The breakthroughs that will define the next decade of AI—in medicine, science, energy, and beyond—will begin here. And I couldn’t be prouder to build that future alongside the people of Oklahoma.