At the Forefront of AI Innovation

2015

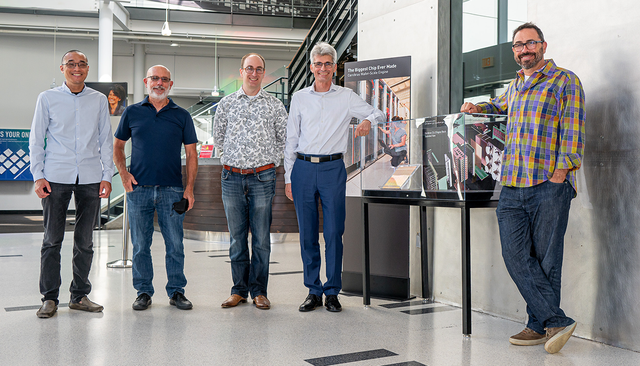

Cerebras is Founded

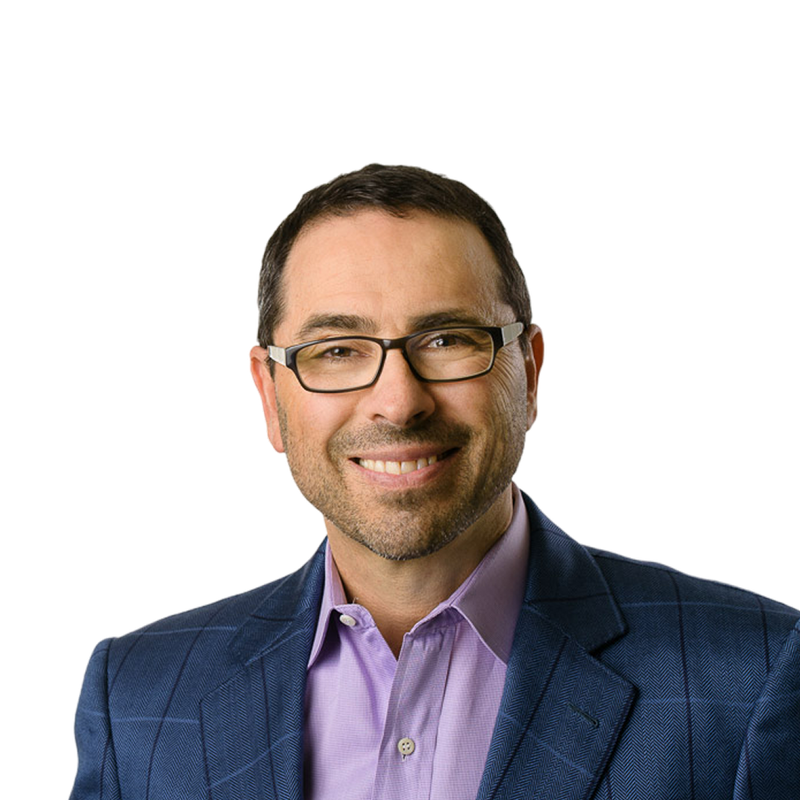

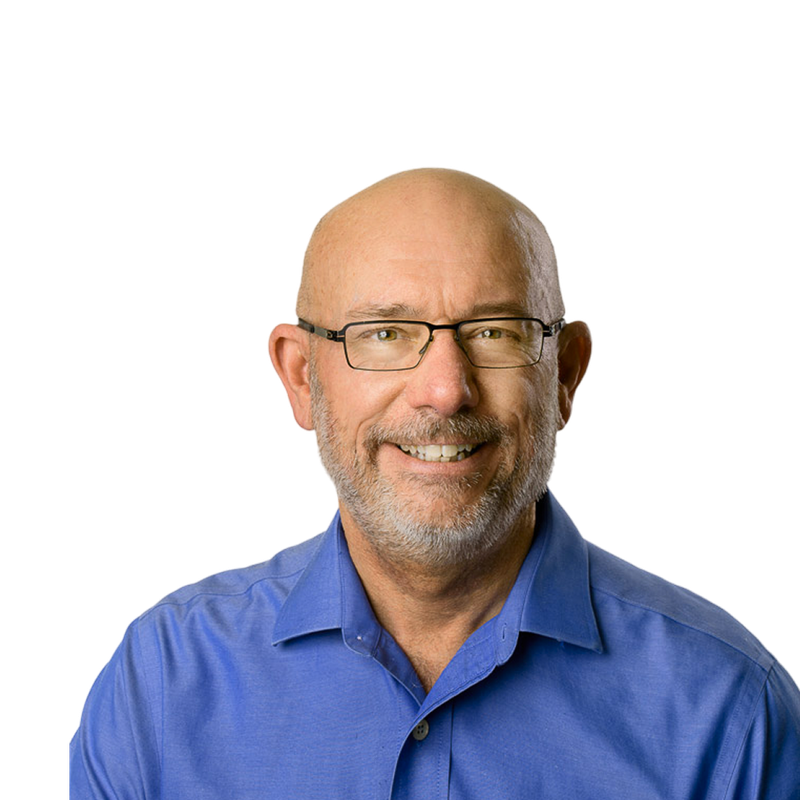

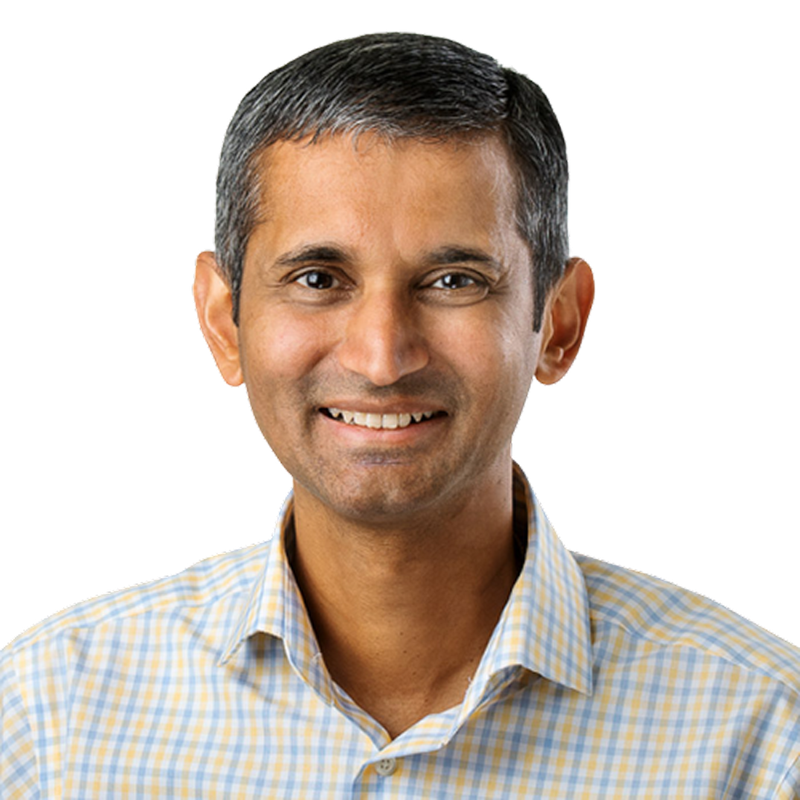

Andrew Feldman, Gary Lauterbach, Michael James, Sean Lie, and Jean-Philippe Fricker launch Cerebras with the aim of bringing wafer-scale computing to market. At the time, it was unclear whether such a feat was technically possible.

2019

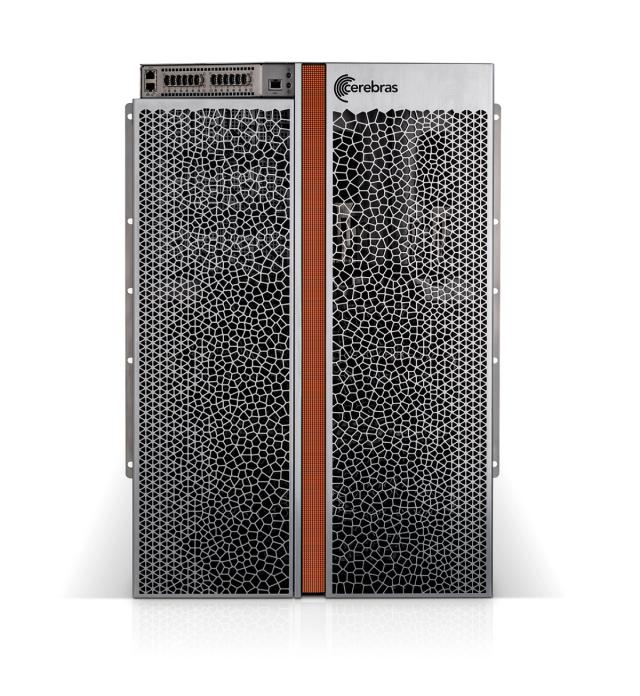

Launch of WSE-1 and CS-1

Cerebras introduces the WSE-1 and the CS-1 system, making our technology available in data centers worldwide.

2021

WSE-2 and CS-2 Debut

Teams at the National Energy Technology Laboratory (NETL) found that the lab's CS-2 system was nearly 500X faster than NETL's Joule supercomputer in field equation modeling.

2022

Gordon Bell Special Prize

Researchers at Argonne National Laboratory are awarded the Gordon Bell Special Prize for groundbreaking research into COVID-19 variants, powered by a Cerebras CS-2 cluster. That same year, the WSE-2 is honored by the Computer History Museum as an “epochal” achievement in chip-making.

2023

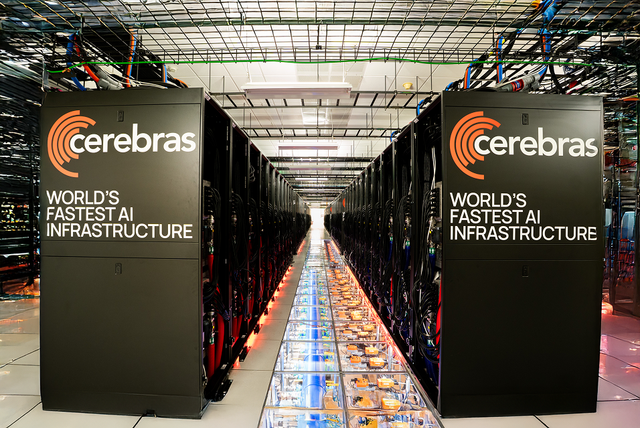

Condor Galaxy Network Unveiled

In partnership with G42, we introduce the Condor Galaxy network of supercomputers, powered by Cerebras systems. The Condor Galaxy 1 (CG-1) supercomputer features 4 exaFLOPs of FP16 performance and 54 million cores.

2024

WSE-3 and CS-3

With the introduction of the WSE-3 chip and CS-3 system, Cerebras shatters all benchmarks for AI inference and training, ushering in a new era of instantaneous AI applications and interactions.

2025

Speed Meets Scale

Adoption accelerates on the strength of record-breaking model performance: Cerebras now powers Meta’s Llama API, Perplexity, and Mistral, along with leading integrations such as Hugging Face and OpenRouter. Cerebras also announces a rapid datacenter expansion across North America and Europe that will make it the world’s #1 provider of high-speed inference.