Introduction

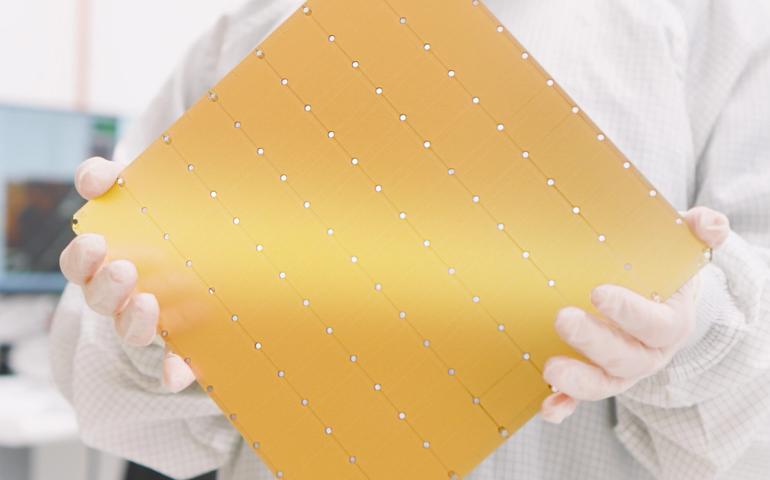

As the demand for scalable, low-latency large language model (LLM) inference surges, API platform providers face the challenge of delivering breakthrough performance while maintaining enterprise-grade security, governance, and ease of integration. The Cerebras API Certification Partner Program addresses these needs by enabling LLM API partners to seamlessly integrate Cerebras’s wafer-scale inference capabilities, validate their implementations against rigorous standards, and jointly bring next-generation AI services to market.

Program Vision

The program’s core objective is to democratize ultra-fast AI inference across the enterprise stack. By certifying API providers who meet strict performance, security, and operational criteria, Cerebras ensures that end users can rely on sub-50 ms inference at scale. Certified partners benefit from technical enablement, co-marketing, and a clear path to evolving from basic integration to full-stack, production-ready solutions.

Program Overview

The Cerebras API Certification Partner Program empowers LLM API platforms to integrate, validate, and jointly market ultra-fast inference on Cerebras wafer-scale hardware. Certified partners gain technical support, co-branding, go-to-market resources, and priority access to new features.

Certified by Cerebras

- Technical integration, optimization, and observability.

- Solution architect and enterprise support.

- Co-developed reference architectures and joint customer proofs of concept.

Eligibility & Requirements

- API Compatibility: Support REST/gRPC endpoints conforming to OpenAPI or OpenAI API standards.

- Performance Benchmarks: Demonstrate inference latency of <150 ms or <50 ms under realistic loads.

- Security Compliance: Implement mTLS, OAuth 2.0/JWT, and audit logging.

- Operational Excellence: 99.9% uptime commitment, automated health checks, and CI/CD for model deployments.

- Joint GTM Commitment: Co-sell motions, participation in partner events, and a dedicated partnership manager.

Partner Benefits

Technical Enablement

- Sandbox access to Cerebras Cloud API credentials and usage quotas.

- Architecture review sessions and performance profiling with Cerebras engineering teams.

- Best-practice guides, sample code, and reference deployments on Kubernetes and serverless frameworks.

Marketing & Sales Support

- Co-branded solution briefs, whitepapers, and datasheets.

- Joint webinars, workshops, and speaking opportunities at industry events.

- Lead sharing and access to the Partner Resources Repository.

- Marketing Development Funds.

Exclusive Programs

- Invitation to the annual Cerebras Partner Summit (SuperNova) for strategic planning and networking.

- Early access to upcoming hardware releases (e.g., WSE-4) and software features.

- Certification badges for partner websites and sales presentations.

Certification Process

1. Application & Onboarding

- Provide integration details, security attestations, and partner profile.

- Kickoff meeting with the Cerebras Partner team.

2. Technical Validation

- Code review, API conformance testing, and performance benchmarking on WSE-3.

- Security and compliance audit.

3. Go-Live & Certification

- Joint launch announcement and press release.

- Certified by Cerebras partner badge.

- Enrollment in ongoing enablement and co-sell programs.

4. Ongoing Partnership

- Quarterly business reviews and continuous performance monitoring.

- Executive leadership connects and fireside chats.

By becoming a Cerebras API Certified Partner, LLM API providers can offer their customers the fastest, most scalable inference capabilities in the industry, backed by Cerebras’s world-leading hardware, expert support, and joint go-to-market momentum.

Contact Cerebras Partner Team

Saurabh Vyas – saurabh.vyas@cerebras.net