Rox provides enterprise revenue agents on top of your data warehouse. The platform uses a secure knowledge graph to combine internal and external sources of data, including all the raw data from customer interactions, your CRM, product usage, and more, plus public sources of data across the web.

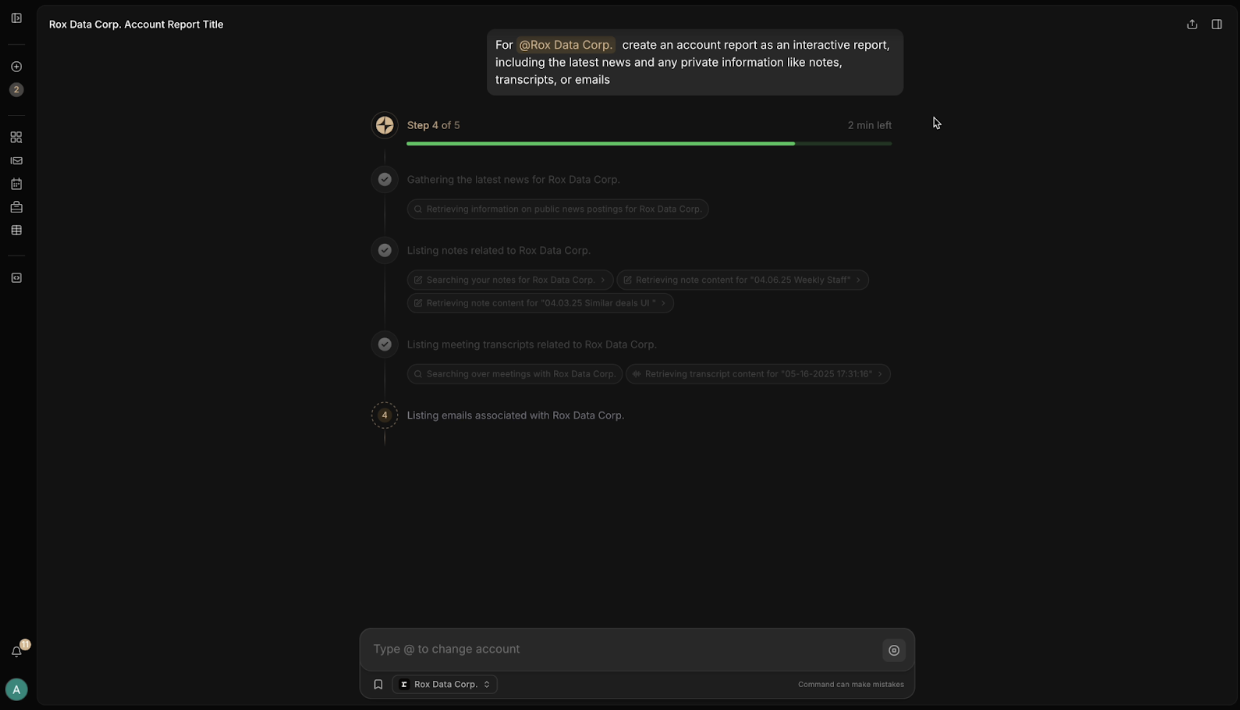

This data is then used by AI agents to automate go-to-market workflows. These workflows include everything a seller does in their day-to-day, from account research, autofilling RFPs, meeting prep, and outreach to monitoring deal risks and moving deals through the pipeline.

Users interact with Rox via web, Slack, macOS, iOS, and a conversational interface called Command.

The challenge

Rox orchestrates multiple AI agents specialized in high-accuracy, multi-step research. That rigor is valuable, but it can feel slow when users are on a sales call, reviewing a deal in Slack, or prepping on a phone. As adoption broadened beyond technical users, expectations converged on responsiveness. Voice raised the bar further: for hands-free workflows to be viable, round-trip latency must feel conversational.

The approach: route by intent, not ideology

Rox uses dynamic model routing to match the job to the right inference path:

- Fast path (user waiting). Command chat/voice, instant refresh in Research, quick factual lookups, and other interactions where the user is actively engaged. These flows prioritize low latency and high tokens/sec.

- Deep path (user not waiting). Bulk or complex jobs like account landscape scans, multi-hop web research, weekend refreshes, where users value depth over immediacy. These flows prioritize reasoning depth and cost efficiency.

This routing is transparent to users, encoded in product UX (e.g., “speed” vs. “depth” modes) and reinforced with clear expectations: when you’re interacting live, responses stream immediately; when you kick off deep research, results arrive when they’re genuinely complete.

Cerebras powers the Fast Path

Across Rox’s entire platform, responsiveness drives adoption and habit. When users are chatting with Command, speaking hands-free, tapping “refresh” in a research grid, Cerebras delivers ultra-low-latency inference that makes Rox feel instantaneous

Cerebras is also useful inside orchestration when short, frequent model calls gate the next step (planning, routing, quick verification, summarization). Keeping those hops fast maintains flow and reduces user-visible wait time across the entire chain.

For deep path workloads, Rox routes to larger models that excel at multi-step reasoning. Those jobs often run in the background (scheduled or batched), writing back to Rox’s knowledge graph and notifying the user when ready.

Economic alignment falls out naturally: deploy premium speed precisely where it creates user value, and use heavier models only when the task genuinely benefits from increased quality.

The experience and impact of speed

For sellers, Command has become the natural starting point for work. They can ask for meeting prep, generate follow-ups, or summarize long threads, and Command instantly orchestrates the right tools such as CRM, calendar, enrichment, and email to return one coherent, explainable thread that streams in real time. The result feels less like querying a system and more like having a capable partner in the loop.

Voice takes that interaction even further. With fast inference as its backbone, hands-free conversations feel natural enough to use while driving, walking between meetings, or navigating a deck. Reps stay in flow, speaking tasks instead of typing them, without the awkward latency that breaks rhythm.

For heavier workloads like research, Rox balances depth with immediacy. Large-scale account discovery and enrichment jobs run continuously in the background, while "I need it now" queries trigger a fast, interactive pass that delivers instant value followed by deeper synthesis once it is ready.

These product choices have a measurable business impact. Teams report higher daily engagement with Command as responses reach "instant" territory, and adoption climbs even further when chat and voice cross that invisible latency threshold. Deep research automations quietly remove hours of manual prep without interrupting the user’s day. Together, these gains mean more meetings prepared on time, faster follow-up execution, and a sharper, more responsive revenue organization.

Scaling fast inference with AWS Marketplace

As Rox continues to expand across global sales teams, scalability and procurement simplicity have become just as important as latency. The same Cerebras Inference that powers Rox’s fast path is now available directly through AWS Marketplace, making it easy for any enterprise to adopt the same architecture – pay-per-token, no infrastructure required.

Teams can simply integrate via API, set spend limits, and start serving real-time inference within the same AWS billing and security framework they already trust.

Whether it’s a chat interface, voice experience, or agent orchestrator, any product that benefits from instant response can now deploy the same foundation Rox uses directly from AWS Marketplace.

Get started

Experience real-time AI performance with the same infrastructure that powers Rox’s agentic workflows.

Cerebras Inference is available today as a fully managed service through AWS Marketplace.